Enhancing Website Performance with AI-Powered Content Moderation

5 mins | 26 Sept 2025

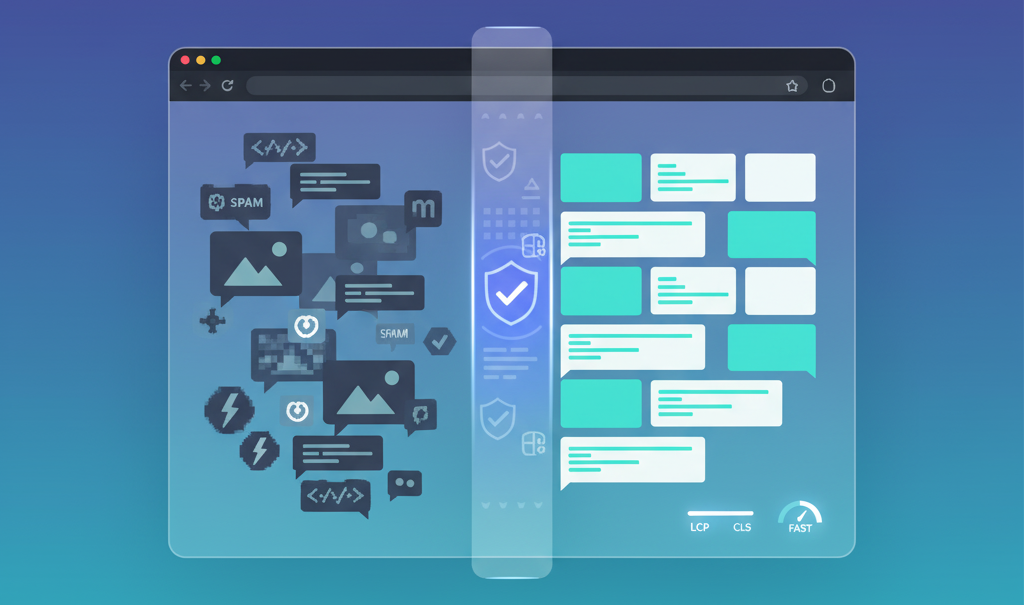

User-generated content (comments, reviews, posts) is the engine of many websites, but it can also slow pages with heavy media, slip in spam or harassment, and chip away at user trust. Left unchecked, it creates bloat, distracts real users, and adds work for support teams.

AI-powered content moderation uses machine-learning models to scan text, images, audio, and video in real time, flagging or holding content that appears risky, low-quality, or off-policy. Companies like 12Grids emphasize the importance of performance-focused solutions, and integrating AI moderation into a site’s infrastructure supports that goal by keeping pages lighter, layouts cleaner, and user experiences smoother.

Done well, moderation isn’t just about safety. By catching problems before they render and shaping what gets shown, it trims page weight, reduces noise, and keeps conversations constructive, which makes sites feel faster, sessions last longer, and communities healthier.

1) Why Moderation Shapes Performance and UX

a) The hidden cost of bad UGC

Spammy comments, link dumps, and oversized media add weight to pages and trigger extra network requests. That drag shows up in Core Web Vitals: large images slow Largest Contentful Paint (LCP), unconstrained embeds shift layouts and raise Cumulative Layout Shift (CLS), and heavy scripts delay responses to input, which hurts Interaction to Next Paint (INP). Low-quality UGC also wastes crawl budget by creating thin or near-duplicate URLs (think empty profiles, copy-paste reviews, and bot-made threads), so search engines spend time on pages that don’t help you rank.

Good moderation cuts this bloat at the source: throttle links for new accounts, cap file sizes, transcode images to modern formats, defer third-party embeds, and collapse duplicate threads. It also keeps templates clean—predictable component sizes, placeholders for pending media, and noindex rules for thin pages—so your layout stays stable and fast.
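Two of these controls, link throttling for new accounts and collapsing duplicates, can be sketched in a few lines. This is a minimal illustration, not a production filter: the limits (`MAX_LINKS_NEW_ACCOUNT`, `NEW_ACCOUNT_AGE_DAYS`) and the function names are assumptions made up for the example, and the duplicate check only catches copies that differ in case or whitespace.

```python
import hashlib
import re

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)

# Hypothetical policy values; tune them for your own community.
MAX_LINKS_NEW_ACCOUNT = 1
NEW_ACCOUNT_AGE_DAYS = 7


def too_many_links(text: str, account_age_days: int) -> bool:
    """Return True when a new account posts more links than the policy allows."""
    if account_age_days >= NEW_ACCOUNT_AGE_DAYS:
        return False
    return len(URL_PATTERN.findall(text)) > MAX_LINKS_NEW_ACCOUNT


def content_fingerprint(text: str) -> str:
    """Hash a lowercased, whitespace-normalized copy so copy-paste posts collide."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def collapse_duplicates(posts: list[str]) -> list[str]:
    """Keep the first occurrence of each fingerprint, preserving order."""
    seen: set[str] = set()
    unique: list[str] = []
    for post in posts:
        fingerprint = content_fingerprint(post)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(post)
    return unique
```

Near-duplicates with small wording changes would need a fuzzier comparison than an exact hash; the sketch only shows where such a check would sit.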

b) Trust → engagement → revenue

People engage more when they feel safe. Safer discussions lift time on page, comment quality, repeat visits, and review volume—signals that correlate with higher conversion rates and better ad viewability. Cleaner threads also help new visitors find real answers faster, which makes them more likely to register, subscribe, or buy.

Watch community health signals as early warnings: spikes in “report,” “mute,” or “block” actions often point to topics, users, or formats that need attention. Use these cues to adjust policies, raise friction for suspected bots, add moderator coverage during hot periods, or refine prompts and UI copy. Small fixes based on these signals compound into a smoother experience and steadier revenue.
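One simple way to turn these signals into an early warning is to compare today's count of reports against a recent baseline. The sketch below assumes you already collect daily counts; the `is_spike` name and the z-score cutoff of 3.0 are illustrative choices, not a recommended standard.

```python
from statistics import mean, pstdev


def is_spike(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's count when it sits far above the recent baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        return today > baseline  # flat history: any increase is notable
    return (today - baseline) / spread > z_threshold
```

Running the same check per topic or per content format, rather than site-wide, makes it easier to see which area needs moderator attention.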

2) How AI Moderation Works (Plain English)

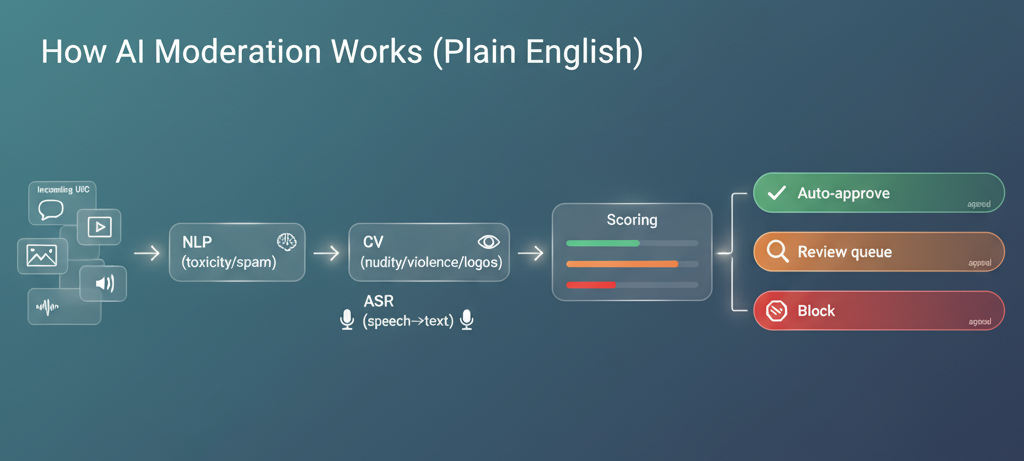

a) What the models look for

Modern systems review multiple content types at once. For example, when a nearshore software development company helps build moderation tools, the models are typically trained on categories like these:

- Text: flags for toxicity, harassment, hate, self-harm cues, scams/phishing, and off-topic or low-effort spam.

- Images: checks for nudity, violence, weaponry, graphic content, and brand/logo misuse.

- Video/Audio: speech is transcribed (ASR) and paired with visual analysis to spot risky scenes or claims embedded in voiceovers and captions.

They also handle multi-language input and context. A slur quoted in a news discussion isn’t the same as direct abuse; reclaimed terms, satire, and academic citations need different treatment. Good systems weigh context windows, user history, and conversation threads to avoid knee-jerk removals that harm healthy discussion.

b) Decisions and workflows

Models output a confidence score for each policy area. Your rules map those scores to actions: auto-approve low-risk posts, blur or label borderline media, downrank content pending review, queue complex cases for moderators, or block clear violations. If you’re evaluating vendors, look for an advanced AI content moderation tool that supports these granular actions with low latency.
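The score-to-action mapping described above can be expressed as a small rule table. This is a sketch under assumptions: the threshold values and the choice to act on the single riskiest score are illustrative, and a real policy would likely set different thresholds per category.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    LABEL = "label"        # blur or label borderline media
    DOWNRANK = "downrank"  # show lower while review is pending
    REVIEW = "review"      # queue for a human moderator
    BLOCK = "block"


# Hypothetical thresholds, checked from strictest to most lenient.
THRESHOLDS = [
    (0.95, Action.BLOCK),
    (0.80, Action.REVIEW),
    (0.60, Action.DOWNRANK),
    (0.40, Action.LABEL),
]


def decide(scores: dict[str, float]) -> Action:
    """Map per-policy confidence scores to one action using the riskiest score."""
    worst = max(scores.values(), default=0.0)
    for floor, action in THRESHOLDS:
        if worst >= floor:
            return action
    return Action.APPROVE
```

Keeping the thresholds in data rather than in branching code makes them easy to audit and adjust when reviewers report too many false positives.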

A human-in-the-loop closes the gap on edge cases. Reviewer decisions feed back into training data, improving thresholds and category precision over time. Add simple appeal flows and transparent labels so users understand outcomes and can correct mistakes.
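As one example of how reviewer decisions can tune thresholds, the sketch below picks the lowest score cutoff at which the flagged set still meets a target precision. The input format (pairs of model score and reviewer verdict) and the `pick_threshold` name are assumptions for illustration; retraining the model itself is a separate, larger step.

```python
from typing import Optional


def pick_threshold(
    reviewed: list[tuple[float, bool]], target_precision: float = 0.95
) -> Optional[float]:
    """Return the lowest cutoff whose flagged items meet the target precision.

    `reviewed` holds (model_score, reviewer_confirmed_violation) pairs.
    Returns None when no cutoff reaches the target.
    """
    for cutoff in sorted({score for score, _ in reviewed}):
        # Everything at or above the cutoff would have been flagged.
        flagged = [confirmed for score, confirmed in reviewed if score >= cutoff]
        precision = sum(flagged) / len(flagged)
        if precision >= target_precision:
            return cutoff
    return None
```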

3) Implementation That Speeds Up Your Site

a) Where moderation runs in your stack

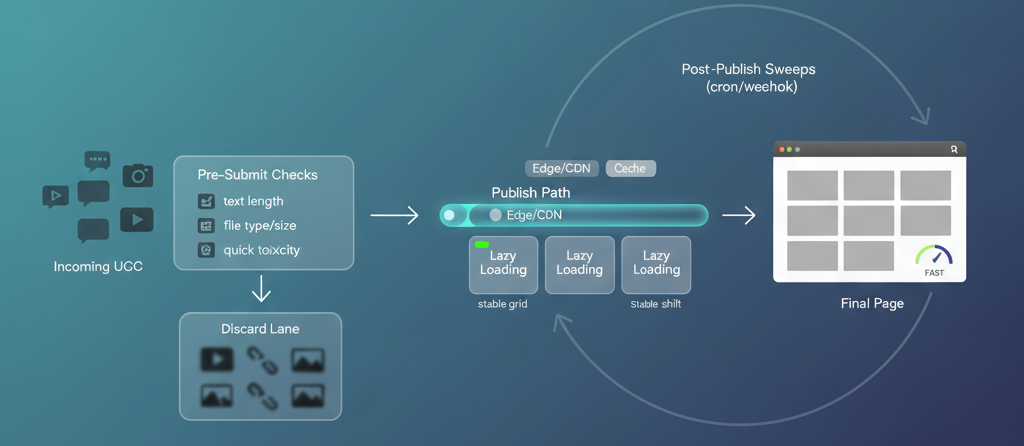

- Pre-submit checks (client or edge): validate text length, run a fast toxicity check, and preflight media (type/size) before upload so risky or oversized content never hits your origin.

- Post-publish sweeps: re-scan older assets during off-peak hours, run cron/batch jobs for archives, and subscribe to webhooks for real-time feeds (comments, reviews, chat) so new risks are caught quickly.

This approach pairs well with a performance-first build culture like the one 12Grids promotes: moderation is just one more gate that keeps the UI lean and dependable.
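A pre-submit check can be as simple as validating text length and media metadata before anything is uploaded. The sketch below is a server-side or edge-side illustration; the limits, the allowed types, and the `MediaInfo` and `preflight` names are assumptions, not values from any particular platform.

```python
from typing import NamedTuple

# Hypothetical limits for illustration only.
MAX_TEXT_CHARS = 2000
MAX_MEDIA_BYTES = 5 * 1024 * 1024
ALLOWED_MEDIA_TYPES = {"image/jpeg", "image/png", "image/webp"}


class MediaInfo(NamedTuple):
    content_type: str
    size_bytes: int


def preflight(text: str, media: list[MediaInfo]) -> list[str]:
    """Return human-readable problems; an empty list means the submission may proceed."""
    problems: list[str] = []
    if not text.strip():
        problems.append("Text is empty.")
    if len(text) > MAX_TEXT_CHARS:
        problems.append(f"Text exceeds {MAX_TEXT_CHARS} characters.")
    for index, item in enumerate(media, start=1):
        if item.content_type not in ALLOWED_MEDIA_TYPES:
            problems.append(f"File {index}: type {item.content_type} is not allowed.")
        if item.size_bytes > MAX_MEDIA_BYTES:
            problems.append(f"File {index}: larger than {MAX_MEDIA_BYTES} bytes.")
    return problems
```

Returning every problem at once, instead of failing on the first, lets the UI show the user a complete list to fix in one pass.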

b) Lightweight UI patterns that feel fast

Use async checks with friendly states (“We’re checking your post…”) and optimistic updates that publish immediately but roll back if flagged. Cap media sizes, throttle submissions, and apply lazy loading and CDN caching to moderated assets to cut load time and bandwidth. Keep component dimensions fixed to avoid layout shifts, and defer third-party embeds until interaction.
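The optimistic-update pattern can be sketched with `asyncio`: show the post right away, then remove it if the check comes back flagged. Here `check_content` is a stand-in for a real moderation call (the keyword match is purely a placeholder), and the in-memory `feed` dictionary is an assumption standing in for your actual store.

```python
import asyncio


async def check_content(text: str) -> bool:
    """Stand-in for a real moderation call; returns True when the text is flagged."""
    await asyncio.sleep(0)  # placeholder for network latency
    return "spam" in text.lower()


async def publish_optimistically(feed: dict[int, str], post_id: int, text: str) -> bool:
    """Show the post immediately, then roll it back if the check flags it.

    Returns True when the post stays published.
    """
    feed[post_id] = text  # optimistic update: visible before the check finishes
    if await check_content(text):
        feed.pop(post_id, None)  # rollback
        return False
    return True
```

In a real UI the rollback should come with a clear message and an appeal path, so the author understands why the post disappeared.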

For teams invested in clean, repeatable front-end patterns, align moderation components with your design and UX guidelines (see the mindset at 12Grids) so states, labels, and error handling look and feel consistent.

4) Measuring Impact and Staying Fair/Compliant

a) Metrics that matter

Track model quality and operations:

- Precision/recall, false-positive rate, time-to-decision, and reviewer SLA (how fast humans clear queues).
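For reference, precision, recall, and false-positive rate can be computed from model flags and reviewer verdicts as follows. The function name and input shape are assumptions for this sketch; ratios with an empty denominator are reported as 0.0 to keep the example simple.

```python
def moderation_metrics(predicted: list[bool], actual: list[bool]) -> dict[str, float]:
    """Compare model flags (`predicted`) with reviewer verdicts (`actual`)."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # flagged, truly violating
    fp = sum(1 for p, a in pairs if p and not a)      # flagged, actually fine
    fn = sum(1 for p, a in pairs if not p and a)      # missed violations
    tn = sum(1 for p, a in pairs if not p and not a)  # correctly left alone

    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "false_positive_rate": ratio(fp, fp + tn),
    }
```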

Tie results to product and business outcomes:

- Core Web Vitals (LCP, CLS, INP), bounce rate, CTR on UGC modules, session length, complaint volume/reports, and revenue per session.

- Compare A/B cohorts with and without pre-submit checks to quantify speed and engagement gains.
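A cohort comparison can start with something as small as the relative change in a median metric, such as LCP in seconds. The sketch below assumes you have per-session samples for each cohort; `relative_change` is a hypothetical helper, and it does not replace a proper significance test.

```python
from statistics import median


def relative_change(control: list[float], treatment: list[float]) -> float:
    """Relative change in the median from control to treatment (negative means lower)."""
    if not control or not treatment:
        raise ValueError("both cohorts need at least one sample")
    base = median(control)
    if base == 0:
        raise ValueError("control median is zero; relative change is undefined")
    return (median(treatment) - base) / base
```

Medians are used here because load-time data tends to have long tails that skew averages.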

b) Policy, privacy, and fairness

Publish clear policies, keep audit logs, and offer appeals so users can contest mistakes. Run regular bias tests across languages and user groups, and document adjustments to thresholds or rules. For data handling, minimize PII, set sane retention windows, and respect regional rules (e.g., GDPR/CCPA). Explain your data practices to users in straightforward wording, not legal jargon.
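An audit log that limits PII and enforces a retention window might look like the sketch below. The 90-day window, the field names, and the keyed-hash approach are assumptions for illustration; a keyed hash is pseudonymization rather than anonymization, so check with your own compliance requirements before relying on it.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=90)  # hypothetical retention window


def pseudonymize(user_id: str, secret: bytes) -> str:
    """Replace the raw user ID with a keyed hash so logs avoid storing it directly."""
    return hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def audit_entry(
    user_id: str, action: str, reason: str, secret: bytes,
    now: Optional[datetime] = None,
) -> dict:
    """Build one audit record with a pseudonymized user and a UTC timestamp."""
    timestamp = now or datetime.now(timezone.utc)
    return {
        "user": pseudonymize(user_id, secret),
        "action": action,
        "reason": reason,
        "at": timestamp,
    }


def purge_expired(entries: list[dict], now: datetime) -> list[dict]:
    """Keep only entries that are still inside the retention window."""
    return [entry for entry in entries if now - entry["at"] <= RETENTION]
```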

A strong moderation layer does more than keep harmful posts out. It trims page weight, keeps layouts stable, and protects conversations—payoffs you feel in faster loads, healthier threads, and steadier growth. When risky or low-quality content never reaches the UI, Core Web Vitals improve and users find what they came for without wading through noise.

If you’re ready to act, start small and measure:

- Audit one UGC surface (comments, reviews, forums) and map the top risk types.

- Pilot pre-submit checks for text and media, then add post-publish sweeps for older content.

- Set clear thresholds and escalation paths; give users transparent labels and an appeal route.

- Track model metrics (precision/recall, time-to-decision) alongside product metrics (LCP, CLS, INP, session length, complaints).

- Schedule periodic bias tests and review data retention so privacy and fairness stay on track.

Treat moderation as a performance feature, not just a safety net. With thoughtful policies, fast decision paths, and steady measurement, you’ll get lighter pages, better experiences, and a community people trust.
